Some snippets that can't grow into full articles

This week I'm having terrible neck pains, so I have neither the mood nor the physical stamina required for writing my weekly blog post. Instead, here's a collection of snippets that I wanted to turn into articles but that were too shallow to fit the bill.

I

Standard p-value tests and quantile estimators are obviously limited to quite simplistic models of the world. This is not necessarily because they are bad, but because the "real world" is impossible to model completely.

I believe I heard this put best by Scott in an old blog post:

> Suppose the people at FiveThirtyEight have created a model to predict the results of an important election. After crunching poll data, area demographics, and all the usual things one crunches in such a situation, their model returns a greater than 999,999,999 in a billion chance that the incumbent wins the election. Suppose further that the results of this model are your only data and you know nothing else about the election. What is your confidence level that the incumbent wins the election? Mine would be significantly less than 999,999,999 in a billion.
>
> More than one in a billion times a political scientist writes a model, they will get completely confused and write something with no relation to reality. More than one in a billion times a programmer writes a program to crunch political statistics, there will be a bug that completely invalidates the results. More than one in a billion times a staffer at a website publishes the results of a political calculation online, ey will accidentally switch which candidate goes with which chance of winning. So one must distinguish between levels of confidence internal and external to a specific model or argument.

I believe this is a good example, and I think it can be extended even to the staunchest fields of study, though to a lesser extent. For example, in this framework I would put a floor of 0.x under any p-value for a generic statement about human psychology, and a floor of 0.00x under any p-value in molecular thermodynamics.
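One rough way to formalize the internal/external distinction (my own framing, not Scott's, and the numbers are purely illustrative assumptions): let $p_{\text{in}}$ be the probability a model assigns to a claim, $\varepsilon$ the probability that the model, its code, or its reporting is broken in some way, and $q$ the probability you'd assign to the claim if the model told you nothing at all. Then:

$$
P(\text{claim}) = (1-\varepsilon)\,p_{\text{in}} + \varepsilon\,q
\quad\Longrightarrow\quad
1 - P(\text{claim}) = (1-\varepsilon)(1-p_{\text{in}}) + \varepsilon\,(1-q)
$$

With $p_{\text{in}} = 1 - 10^{-9}$, an assumed $\varepsilon = 10^{-6}$, and $q = 0.5$ for a two-candidate race:

$$
1 - P(\text{claim}) = (1-10^{-6})\cdot 10^{-9} + 10^{-6}\cdot 0.5 \approx 5.01\times 10^{-7}
$$

That's roughly 999,999,499 in a billion rather than 999,999,999. The term $\varepsilon(1-q)$ acts as a floor on the error no matter how close $p_{\text{in}}$ gets to 1, which is the same kind of per-field floor I'm putting on p-values.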

I think most people intuitively agree that there is a background error rate that can't be detected in most scientific realms of inquiry, at least until the problem gets into engineering hands, where reality kicks in and shows a value for the aggregated errors of the theories contributing to the application.

Alas, where people disagree is on what that background error rate ought to be for any given field or subfield of study.

But this background rate is taken into account, at least implicitly. Physicists studying materials and chemists studying crystals usually have almost religious certainty in their fields. Molecular biologists and biochemists view a theory as simply a better-than-random tool for searching for experiments. Astute doctors view anything short of a massive, placebo-controlled, double-blind trial as almost noise. Good psychologists usually don't even dare to speak about the chances of their findings being "true", since such a fuzzy definition of truth is not part of common epistemology.

Everyone knows this on an intuitive level. It's no coincidence that political sides argue about the socio-economic feasibility of nuclear energy rather than about the feasibility of suspending and confining a fusion reaction in a self-fueled magnetic field inside an extremely complex toroid.

II

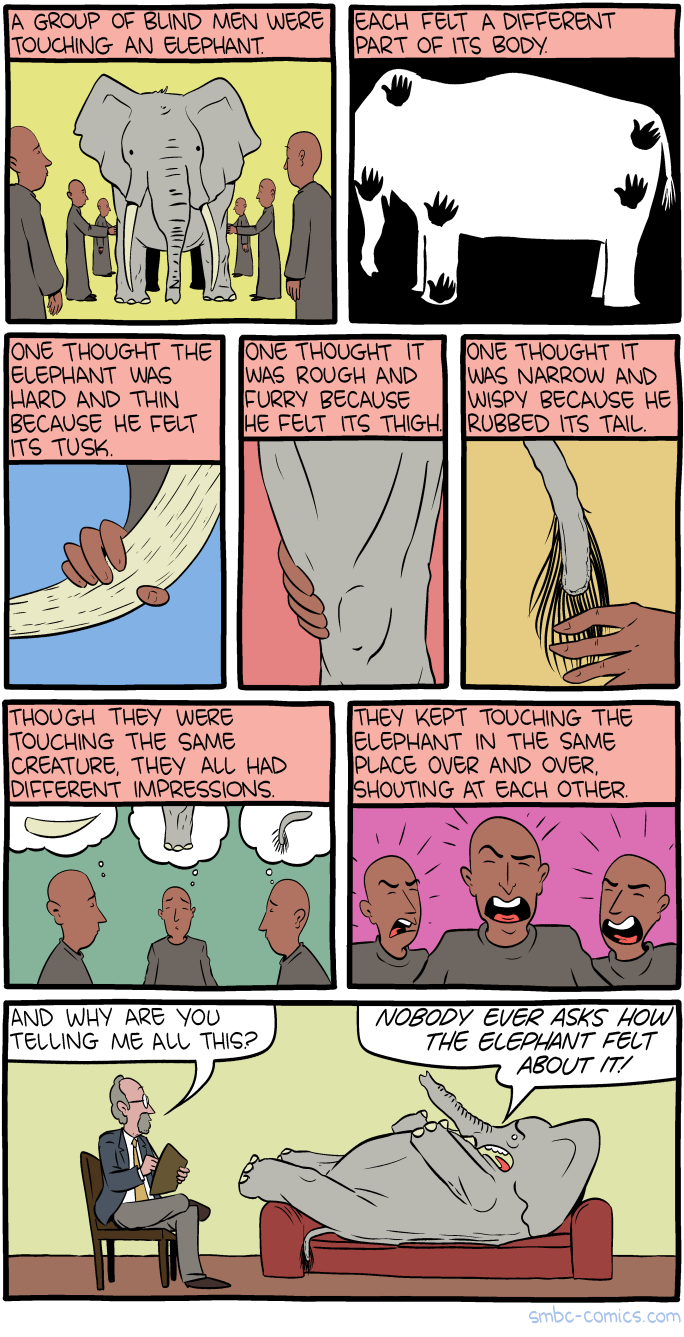

I love the metaphor of the blind men touching an elephant to try and determine its shape.

> [...] a group of blind men who have never come across an elephant before and who learn and conceptualize what the elephant is like by touching it. Each blind man feels a different part of the elephant's body, but only one part, such as the side or the tusk. They then describe the elephant based on their limited experience and their descriptions of the elephant are different from each other. In some versions, they come to suspect that the other person is dishonest and they come to blows.

Stroustrup uses this metaphor in an iconic talk to describe how people understand C++. The language has grown so large that expertise has become niche, even among the very people creating and maintaining the spec and the compilers.

I also like the infinite number of interpretations one can make by de-metaphorizing the elephant and leaving the story intact.

I really, really want to hear someone tell a joke that includes something about one of the blind men stroking the elephant's dong and C++ metaprogramming.

III

I have 5 "simple" rules that I use to more or less rule out countries I'd want to visit:

- Low malaria incidence (< 0.1% of the population, with fairly tight error bars, and/or large malaria-free areas)

- De jure equality between people no matter their phenotype

- Population above 100,000 (i.e. not a tax-haven city or a random island in the middle of nowhere)

- Crime rate of < 0.01% of the population yearly (countries like Iraq, Yemen, and Russia make this cut; it just excludes extremely violent countries)

- No ongoing war or dictatorship constantly enforced via heavy-handed military action (think North Korea)

If more than one of these doesn't hold for a country, I'd put it in my "no go" bucket, with a few exceptions (e.g. Colombia, Tanzania, Zambia). I find it very surprising that this is enough to exclude over half the countries in the world.
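The "more than one rule fails, minus a few manual exceptions" logic is easy to misread, so here is a minimal Python sketch of it. Everything in it is hypothetical: the `Country` fields, the country names, and my reading of the malaria rule as "low incidence or large malaria-free areas". The thresholds are just the percentages from the list above written as fractions; there's no real dataset behind this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Country:
    # Hypothetical fields; no real data source is implied.
    name: str
    malaria_incidence: float            # yearly cases as a fraction of the population
    has_large_malaria_free_areas: bool
    de_jure_equality: bool              # legal equality regardless of phenotype
    population: int
    yearly_crime_rate: float            # yearly crimes as a fraction of the population
    war_or_military_dictatorship: bool


def failed_rules(c: Country) -> int:
    """Count how many of the five rules a country fails."""
    passes = [
        c.malaria_incidence < 0.001 or c.has_large_malaria_free_areas,  # < 0.1%
        c.de_jure_equality,
        c.population > 100_000,
        c.yearly_crime_rate < 0.0001,                                   # < 0.01%
        not c.war_or_military_dictatorship,
    ]
    return sum(1 for ok in passes if not ok)


def is_no_go(c: Country, exceptions: frozenset = frozenset()) -> bool:
    """A country is "no go" if it fails more than one rule, unless it's a manual exception."""
    if c.name in exceptions:
        return False
    return failed_rules(c) > 1


# Usage with made-up countries:
fine = Country("Testland", 0.0, False, True, 5_000_000, 0.00001, False)
tiny = Country("Tinyisle", 0.05, False, True, 50_000, 0.00001, False)
print(failed_rules(fine), is_no_go(fine))        # 0 False
print(failed_rules(tiny), is_no_go(tiny))        # 2 True
print(is_no_go(tiny, frozenset({"Tinyisle"})))   # False
```

Failing exactly one rule is tolerated; the exceptions set is how countries like the ones I listed escape the bucket despite failing two or more.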

IV

There are some snippets of non-dualistic wisdom that are entrenched within Western languages. Chief among them is:

"Discovering who you are"

But also things such as the concept of "free will", the idea of "finding yourself", and the phrase "out of character" (as in, "That shirt was out of character for John to wear").

Interestingly enough, most of these concepts seem to lead us to think of ourselves as a singular entity in the long run, but most of our short-term ways of talking about ourselves are still dualistic. This is more or less the reverse of how I hear Buddhists talk about it: they seem to implicitly accept dualism in the long term (e.g. by introducing morals, a soul, afterlives, and reincarnation, and by generally using dualist language)... but reject it in the short term.

V

Political correctness, I've finally realized, is synonymous with not bringing up information that forces the discussion of implicit rules.

This is probably the one conceptual insight I've gotten from reading Lacan & Zizek.

Implicit rules are usually implicit for a reason: they are necessary, or at least feel that way, but they violate rational reasoning or shared moral judgments, so nobody wants them laid out. They are usually followed out of fear of ostracism and because they "feel" right, but they are not enforced in court or in any other setting that uses explicit rules.

If you spell out the rule itself, people can just say "no, that's not a rule, nobody says that, nobody writes it down". However, you can make people acknowledge the rule by taking an action that breaks it without breaking any other rule. This results in the rule either losing power or being made explicit (so that it can be enforced), or in some other explicit rule getting bent to a comic extent to "cover" for the implicit one.

Political correctness consists in not engaging in actions that endanger the implicit rules of your in-group. Note that "action", the way I use the word, includes speech acts, such as proving, bringing up, or pointing out a given piece of information.

VI

The one time I ventured into pundit territory to make zero-skin-in-the-game predictions was in early March, when talking about COVID. To my surprise, I think I was basically 100% on the money: from predicting the avoidable damage caused by reacting based on poor statistics, to calling out the age gap in its effects and the inconsistencies between the number of reported cases and the CFR (case fatality rate).

I have received neither praise nor prize for this spectacular prediction, and I think it's a really good example of the whole "systems can be dumber than any individual" idea. That is, it showcases how badly medical systems and governments reacted, even though they had more expertise and more data than I did (and, I'm sure, than thousands of other people who reached similar conclusions, many of them part of the very systems that reacted poorly).

Most showcases of this kind only emerge after the fact, but now that most records and timelines are public, we can finally see how badly government systems are doing compared to individuals at understanding certain types of problems (e.g. pandemics). I think COVID-19 might be the first widely publicized case of this, together with the 2000s financial crash and the subsequent money printing.

VII

I've been trying really hard to convince my remote-working friends to just switch tax residency within the EU (it's not that hard: just buy an apartment, start a company, and stay in the country you want to be a resident of more than in any other single country)... and it's surprising how many of them have a blind spot for a literal doubling of their income by cutting down on tax, while spending time worrying about single-digit percentage increases and decreases.

I find many groups of people hypocritical, but I find capitalist libertarians living in high-tax countries the most so. Either:

- You are a libertarian, so to help your cause and live a happier life, you need to move to a low-police, low-tax, low-government country.

- Doing the above is impossible for you because you think shared culture, community, and putting down roots have inherent non-monetary value that can't be accurately traded or represented by a market system, and that individual actions are mainly guided by communities rather than by individuals themselves... in which case, you've just made an argument for why libertarianism doesn't really work or make sense.

In all honesty, I think that if even 10% of the 1% of libertarians (usually highly independent and in the top 1-10% income bracket) decided to pack up and leave for a country with 0-15% total tax (including social security and other de facto taxes), we might actually see some real positive economic change in the world.

VIII

I've considered starting another blog with a narrower focus on machine learning and epistemology, essentially consisting of:

- What the capabilities of machine learning, and of computing in general, actually are in the 21st century, giving a framework for thinking about what is and isn't possible.

- Writing down a pragmatic & skeptical epistemology like the one used in the scientific revolution, which would provide the necessary groundwork for figuring out the areas in which our religious behavior stops us from using computers.

- Providing a lot of examples of competing systems and views on ML and epistemology and showing how they fail.

I already have over a dozen articles on the subjects above, and the more I write about them, the more I feel like this is the one area where I have something new and meaningful to share with people.

My main blocker right now is validation for my ideas: unless I manage to somehow validate them in a hard-to-fake way (e.g. by solving predictive problems that are worth a large amount of money), they are likely just delusional.

I believe the current malign framework around scientific knowledge and AI is in large part due to quacks who lacked skin in the game.

Fisher became a famous statistician by building models to predict the stock market; his models failed, but somehow they are used all over the place in medicine, psychology, and the like. MIRI, OpenAI, and their associates are the main drivers behind "AI risk awareness", yet, to my knowledge, despite being in the most lucrative field of our era, they have produced zero market-relevant research and instead run on donations.

It's the old "self-help book about how to be successful by writing self-help books" trick, but with some extra steps.

The point is, the world seems full of people who think they have something "figured out" but lack validation; those people then become popular, are often wrong, and the world falls a bit more into darkness. I'd rather not be one of those people, so I need some strong signal that I have some things "right" before I push my ideas harder and in a more focused way.

In the meantime, I'm probably going to try and do more exploratory writing, but I'm saddened by the possibility that "meantime" might be forever.

Published on: 1970-01-01